Most simple integration systems use files with various formats to move data. Client drops a file to a folder for processing and your system takes that to update account balance, send email to bunch of people or send work orders. When you are working with files, you can use integration service's drag and drop features to create an integration package. BI development studio is very good for designing control flow items, data flow items and overall database integrations.

I was almost to give 5 star rating to script task feature in there, but it is hard to debug and work with. It is a good flexiblity to add your C# code inside the package you are building. It must be hard to maintain all those script code and then debug production problems.

Here are the steps to make a package for monitoring a directory and then importing data from each file.

Step1: Create a new Integration Services Project from SQL Server business intelligence development studio

Step2: Drag and drop Foreach Loop Container to Control flow

Step3: Right click in the control flow screen and select variables option to define the following variables.

ImportFolder has predefined value and we will not change that in this demo. Other values will change

Step4:We will be processing each file in our "ImportFolder" directory and recording file info. We will use SqlTask to insert queries, Script Task to get file info and BulkInsert to add records. Now, we will drag and drop SQL Task, Script Task, another Sql Task, Bulk Insert Task, and final File System Task. You should see this screen after dropping all those.

Step5:Now we need to define our directory folder for "FOREACH Loop container". Set foreach container enumerator to File enumerator and then click expressions. You can set property column to "Directory" from dropdown and set value to @[User::ImportFolder]

This will set the directory to our import folder variable.

Step6: We need to set read only and read write variables for script editor.

ReadOnlyVariables: User::ImportFileName

ReadWriteVariables:User::ImportFileShortName,User::ImportFileSize

You need to click "edit script" button to open script editor. I put file operations into script task to use script feature.

You can call variables with Dts.Variables["ImportFileName"].Value and use the same syntax to set the value. You should specify these variable in the readonly and readwrite variable list.

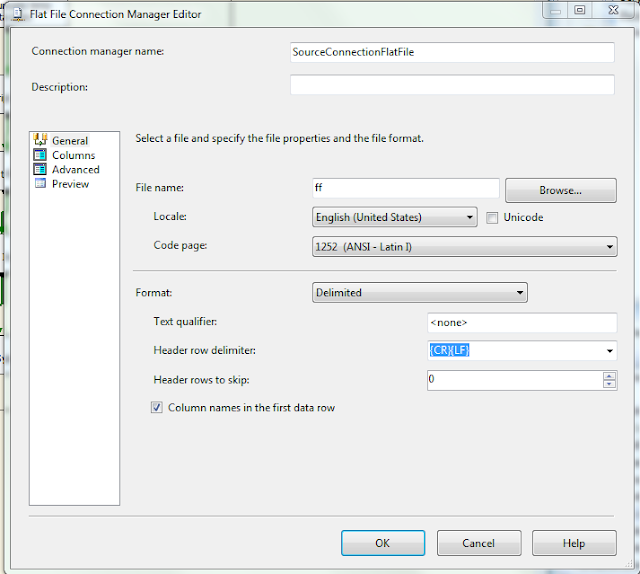

Step8: Next task is to call bulk insert to move data into temporary table. We need to define file source. You can add flat file source to use in bulk insert operation. Right click then select new connection. If you select flat file source, this screen will pop up.

File name is not important, because we will map to our variable.

Click flat file properties in the control flow screen and set connection string to "@[User::ImportFileName]"

Drag and drop bulk insert operation. You need to set source connection to your flat file source.

Set connections to your database and also set the destination table. Destination table should have same columns as your data file if you are importing data with default settings.

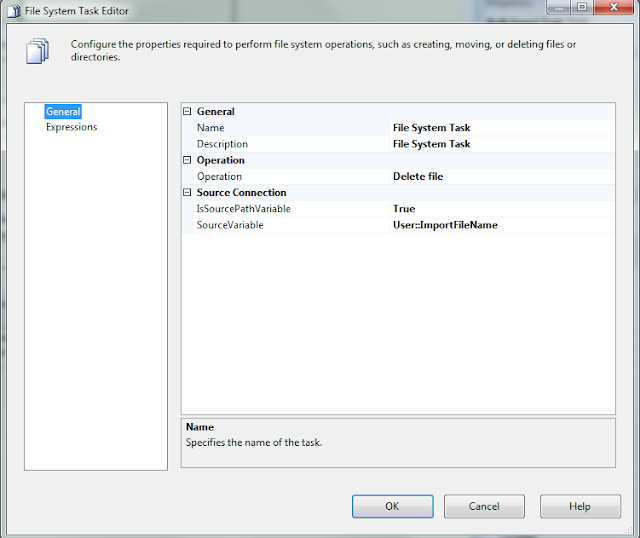

Step9: Next step is to delete the data file after processing. We will add File System Task to delete a file.

Source variable "User::ImportFileName" was populated from Foreach container.

This loop will run for each file in the specified folder and execute script and sql queries. We used sql task, file system task, Bulk insert, Script task and iterator.

You can use other tasks to add more features.

I was almost to give 5 star rating to script task feature in there, but it is hard to debug and work with. It is a good flexiblity to add your C# code inside the package you are building. It must be hard to maintain all those script code and then debug production problems.

Here are the steps to make a package for monitoring a directory and then importing data from each file.

Step1: Create a new Integration Services Project from SQL Server business intelligence development studio

Step2: Drag and drop Foreach Loop Container to Control flow

Step3: Right click in the control flow screen and select variables option to define the following variables.

- FileRecordId: to use as a reference to your file record in the file reference table,

- ImportFileName: name of the file that we will record into table

- ImportFileShortName: only filename

- ImportFileSize: Script Task will update this variable for file size.

- ImportFolder: to define folder for our Foreach loop

ImportFolder has predefined value and we will not change that in this demo. Other values will change

Step4:We will be processing each file in our "ImportFolder" directory and recording file info. We will use SqlTask to insert queries, Script Task to get file info and BulkInsert to add records. Now, we will drag and drop SQL Task, Script Task, another Sql Task, Bulk Insert Task, and final File System Task. You should see this screen after dropping all those.

Step5:Now we need to define our directory folder for "FOREACH Loop container". Set foreach container enumerator to File enumerator and then click expressions. You can set property column to "Directory" from dropdown and set value to @[User::ImportFolder]

This will set the directory to our import folder variable.

Step6: We need to set read only and read write variables for script editor.

ReadOnlyVariables: User::ImportFileName

ReadWriteVariables:User::ImportFileShortName,User::ImportFileSize

You need to click "edit script" button to open script editor. I put file operations into script task to use script feature.

You can call variables with Dts.Variables["ImportFileName"].Value and use the same syntax to set the value. You should specify these variable in the readonly and readwrite variable list.

public void Main() { Dts.Log("start script task", 999, null); //get file size string filepath = (string)Dts.Variables["ImportFileName"].Value; if (File.Exists(filepath)) { Dts.Log("File exists", 999, null); //get size FileInfo flatfileinfo = new FileInfo(filepath); Int32 filesize = (Int32)(flatfileinfo.Length/1000); Dts.Variables["ImportFileShortName"].Value = flatfileinfo.Name; //write this size Dts.Variables["ImportFileSize"].Value = filesize; Dts.TaskResult = (int)ScriptResults.Success; Dts.Log("finished script task", 999, null); return; } Dts.TaskResult = (int)ScriptResults.Failure; Dts.Log("failed script task", 999, null); }Step7: We will use SQL task to insert file info to the filerecord table.

Ole Db is using Question mark to identify input variables. You need to map variables in parameter mapping screen.

Query:

INSERT INTO [dbo].[CustomerFileRecords] ([CreatedOn] ,[FileSize] ,[Name] ,[TotalLines] ,[ReadStart] ,[ReadEnd] ,[ImportedRecords] ,[ErrRecords] ,[Comment] ,FullName) VALUES (getutcdate() ,? ,? ,0 ,getutcdate() ,null ,null ,null ,'add file info' ,?) declare @i int set @i = scope_identity(); select @i as FileId This simple query inserts a record into File record table about the file size, file name and file path. You are taking these information from Script Task and assigning to variables. Query task is using same variables to create a record.

Step8: Next task is to call bulk insert to move data into temporary table. We need to define file source. You can add flat file source to use in bulk insert operation. Right click then select new connection. If you select flat file source, this screen will pop up.

File name is not important, because we will map to our variable.

Click flat file properties in the control flow screen and set connection string to "@[User::ImportFileName]"

Drag and drop bulk insert operation. You need to set source connection to your flat file source.

Set connections to your database and also set the destination table. Destination table should have same columns as your data file if you are importing data with default settings.

Step9: Next step is to delete the data file after processing. We will add File System Task to delete a file.

Source variable "User::ImportFileName" was populated from Foreach container.

This loop will run for each file in the specified folder and execute script and sql queries. We used sql task, file system task, Bulk insert, Script task and iterator.

You can use other tasks to add more features.

No comments:

Post a Comment

Hey!

Let me know what you think?